Welcome to my personal blog and website. I’m a software engineer who writes about technology, programming, and various projects I work on.

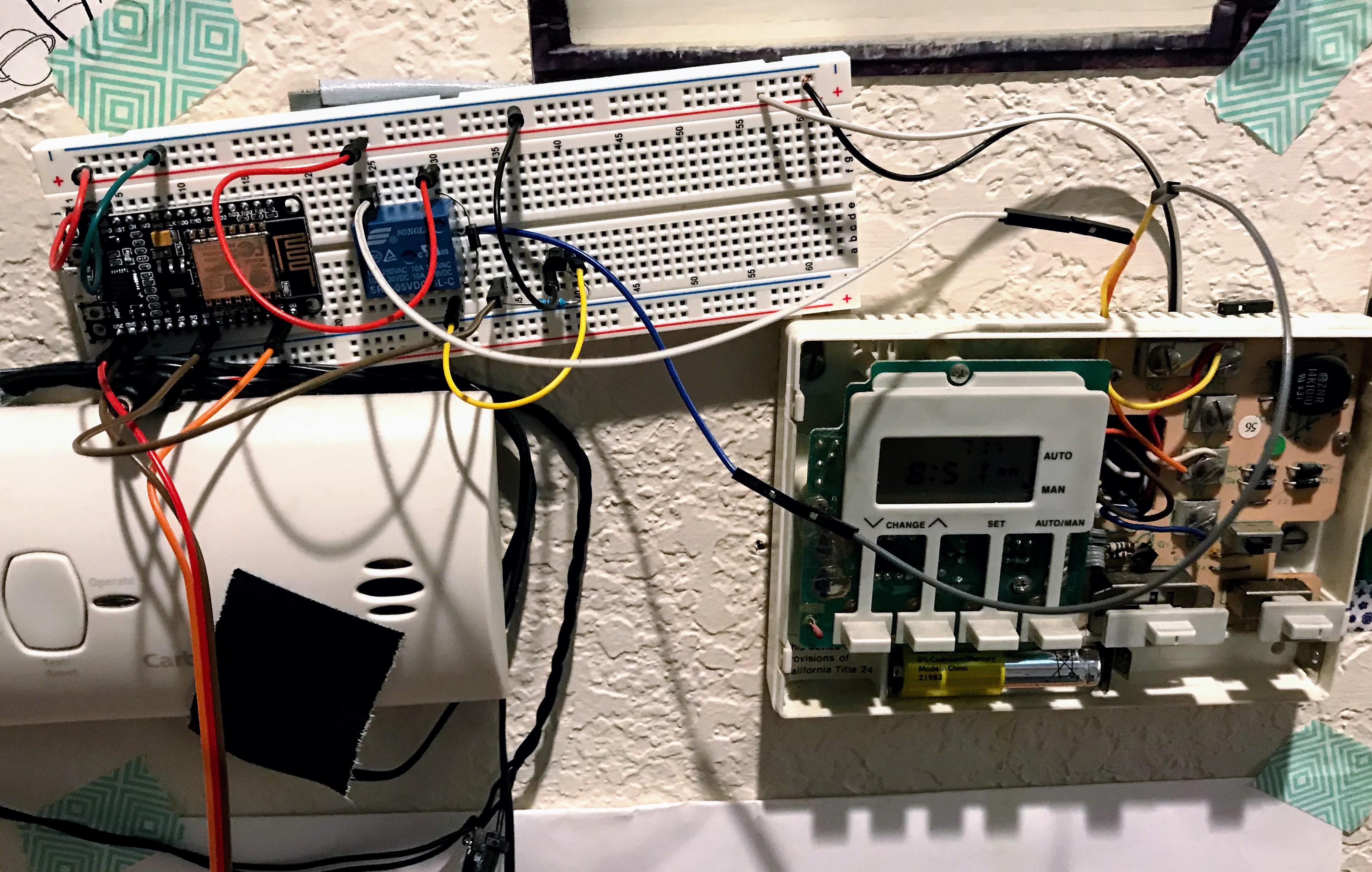

Building your own more expensive Nest: Arduino, Android, React Native.

I recently bought a used Fiat 500e, naturally I set out to reverse engineer the CAN bus in it. That led me to discover Arduinos (which I knew existed but had never used) as well as ESP8266s. I had previously used FHEM to wire up my heater in Germany but it felt outdated even in 2011, much more so now so I decided to go for something prettier.

I first build a prototype on a breadboard that could connect to our wifi and control the heater. The Arduino acts as a temperature sensor as well as a way of switching our heater on. It however does not implement any control mechanism, instead it subscribes and publishes to an MQTT server and the actual control-loop is implemented using Node-RED. This seems beneficial as it is much easier to maintain software on a Linux machine than OTA updating an Arduino. Also, it allows leveraging cool plugins available in Node-RED.

By Henrik Mühe

read moreReverse-engineering exact location information through trilateration.

Many datasets on the internet accidentally expose location information by allowing distance based search (“find everything within 3 miles around this point”) from arbitrary points of origin. I happened upon such a dataset and wanted to try rediscover the original location through trilateration.

Trilateration, which is commonly wrongly referred to as triangulation, is the

process of finding a location L given three other locations A, B, C and

their distance to L (let’s call that dA, dB and dC). Very surprisingly

to me, I was not able to find a lot of good, sure-fire solutions to this easily

when I wanted to solve a trilateration problem so there’s a summary of what I

found.

By Henrik Mühe

read moreAutomating allocation reports in Ledger.

Motivation

If you own any stocks or funds you probably want to track the allocation, meaning how much money is in stocks and funds respectively. Some fairly well known low key investment strategies rely on balancing this ratio, for instance this one.

In Ledger, allocations can be modelled easily through allocation based accounts and automatic transactions. This is outlined here. If you do this however, you still have to manually update allocations for every fund you own which is a nuisance, especially if you own something like a target retirement investment fund which naturally and purposefully changes its allocation strategy over time.

By Henrik Mühe

read moreAutomating commodity prices in Ledger.

Motivation

If you use Ledger CLI, chances are you will use more commodities than just dollar. This is actually one of Ledger’s best features. For instance, I track our 401k in Ledger and it allows me to list the ticker symbols of the funds we have purchased inside the 401k as well as the fact that this is pre-tax money. Our opening balances look something like this:

2016/07/19 * Opening Balance

Assets:Checking $200.00

Assets:Investments:401 3.1 VFIFX @ p$29.8

Assets:Investments:401 2.5 VTTSX @ p$29.8

...

Income:Opening Balances

Roughly, this means that we have two different funds in our 401k with ticker

symbols VFIFX and VTTSX respectively. Also, the funds are not valued in dollars but

in p$ which is our commodity for pre-tax money. The idea is that this money,

if accessed today, has a much lower actual value because we’d have to pay

federal, state and penality taxes on it. Therefore, the price database for

this looks like this

By Henrik Mühe

read moreLoading Chase.com transactions into Ledger

When trying to migrate away from Mint to Ledger, one of the major obstacles is automating the work of entering every 3 dollar coffee shop transaction manually. Ledger does provide some help in this area through the use of ledger xact, yet as of today, we enter 25 transactions per week into ledger and a large chunk of them is from our Chase credit cards. Automation seems to be the easiest way to make this a lot less painful.

By Henrik Mühe

read more